In today’s world, where data is becoming increasingly valuable, proper backup management is crucial for the security of information systems. In this article, I present an effective way to automate the backup of key configuration files in Proxmox-based systems using a simple bash script and Crontab configuration.

Bash Script for Backup of the /etc Directory

The /etc directory contains critical system configuration files that are essential for the proper functioning of the operating system and various applications. Loss of or damage to these files can lead to serious problems. The script below, backup-etc.sh, automates the backup of this directory:

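    #!/bin/bash
    # Timestamp that makes each archive name unique
    date_now=$(date +%Y_%m_%d-%H_%M_%S)
    # Archive /etc with zstd compression into the Proxmox dump directory
    tar --use-compress-program zstd -cf "/var/lib/vz/dump/vz-etc-$(hostname)-$date_now.tar.zst" /etc/
    # Remove this script's archives once they are older than 100 days
    find /var/lib/vz/dump/ -name 'vz-etc-*.tar.zst' -type f -mtime +100 -delete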

This script performs the following operations:

- Generates the current date and time, which are added to the name of the archive to easily identify individual copies.

- Uses the tar program with zstd compression to create an archived and compressed copy of the /etc directory.

- Removes archives older than 100 days from the /var/lib/vz/dump/ location, thus keeping disk usage under control.
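A backup is only useful if it can be read back. The following is a minimal sketch for inspecting an archive and restoring a single file from it. The function names list_backup and restore_from_backup are illustrative and not part of the original script. It relies on GNU tar's default behaviour of storing member names without the leading slash, so /etc/hosts appears in the archive as etc/hosts.

```shell
#!/bin/bash

# Print the member names stored in a zstd-compressed tar archive.
# (list_backup is a hypothetical helper name.)
list_backup() {
    local archive=$1
    tar --use-compress-program zstd -tf "$archive"
}

# Extract one member (for example etc/hosts) into a scratch directory,
# leaving the live /etc untouched.
# (restore_from_backup is a hypothetical helper name.)
restore_from_backup() {
    local archive=$1 member=$2 target_dir=$3
    mkdir -p "$target_dir"
    tar --use-compress-program zstd -xf "$archive" -C "$target_dir" "$member"
}
```

For example, restore_from_backup /var/lib/vz/dump/vz-etc-<hostname>-<date>.tar.zst etc/hosts /tmp/restore would place the file at /tmp/restore/etc/hosts, where it can be compared with the live copy before anything is overwritten.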

Adding Script to Crontab

To automate the backup process, the script should be added to crontab. Below is a sample configuration that runs the script daily at 2:40 AM:

    # crontab -e
    40 2 * * * /root/backup-etc.sh > /dev/null 2>&1

Redirecting both standard output and standard error to /dev/null keeps the job silent, so cron has no output to mail to the root user. The trade-off is that error messages from a failed backup are discarded as well.
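If a record of each run is preferred over silence, the output can be appended to a log file instead. Below is a minimal sketch of a wrapper that does this. The function name run_logged and the log path in the example call are my assumptions, not part of the original setup.

```shell
#!/bin/bash

# Run a command, appending its stdout, stderr and exit status to a log file.
# Returns the exit status of the wrapped command.
# (run_logged is a hypothetical helper name.)
run_logged() {
    local log_file=$1
    shift
    local status=0
    {
        echo "=== $(date '+%Y-%m-%d %H:%M:%S') start: $*"
        "$@" || status=$?
        echo "=== exit status: $status"
    } >> "$log_file" 2>&1
    return "$status"
}

# Example call (the log path is an assumption):
# run_logged /var/log/backup-etc.log /root/backup-etc.sh
```

A simpler alternative is to change the redirection in the crontab entry itself from > /dev/null 2>&1 to >> /var/log/backup-etc.log 2>&1, again with a log path of your choosing.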

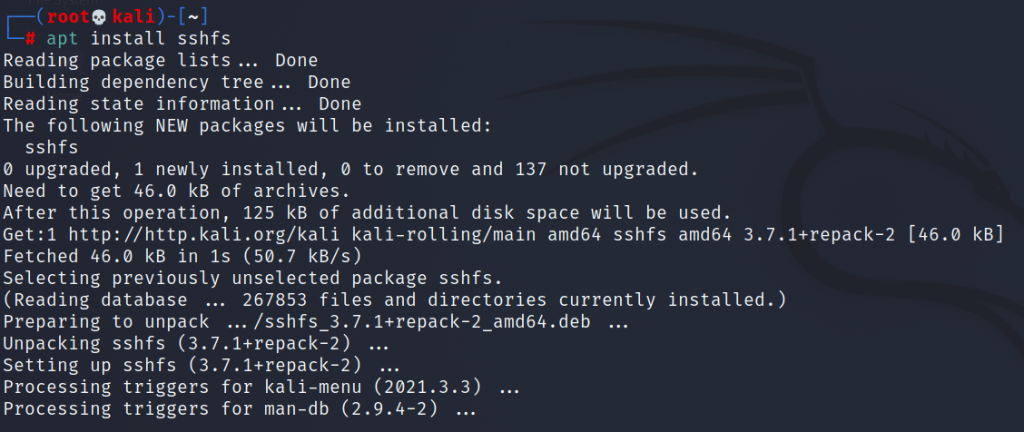

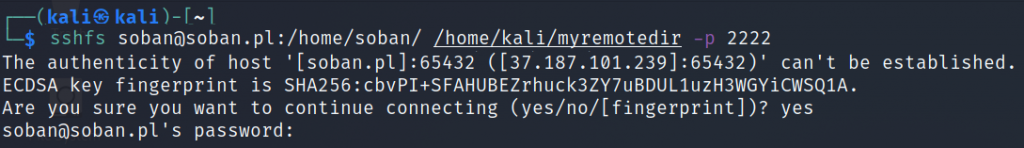

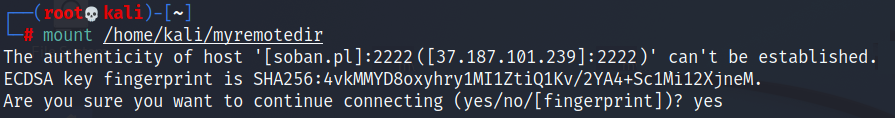

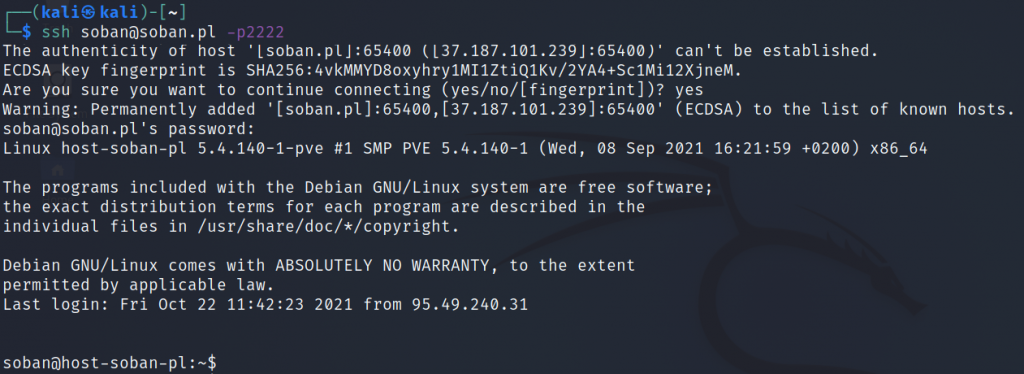

Download the Script from soban.pl

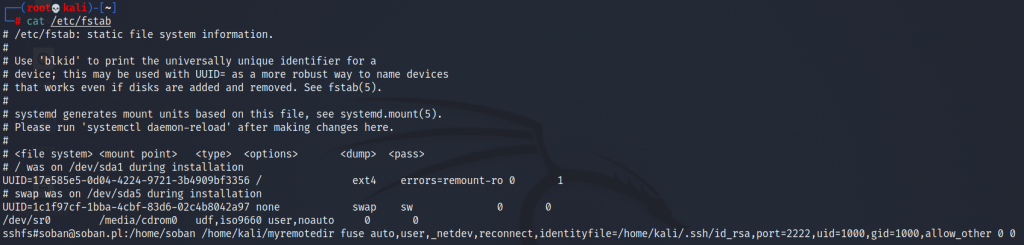

The backup-etc.sh script is also available for download from the soban.pl website. You can download it using the following wget command and immediately save it as /root/backup-etc.sh:

    # wget -O /root/backup-etc.sh https://soban.pl/bash/backup-etc.sh && chmod +x /root/backup-etc.sh

With this simple command, the script is downloaded from the server and granted appropriate executable permissions.
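Since the script will run as root, it is worth a quick check before it is added to crontab. The sketch below shows one possible set of sanity checks. The function name check_script is hypothetical, and the checks are a suggestion rather than part of the original procedure.

```shell
#!/bin/bash

# Basic sanity checks for a downloaded shell script before it is scheduled.
# (check_script is a hypothetical helper name.)
check_script() {
    local script=$1
    # The file must exist and must not be empty
    [ -s "$script" ] || { echo "missing or empty: $script" >&2; return 1; }
    # bash -n parses the file without executing any command in it
    bash -n "$script" || { echo "syntax error in: $script" >&2; return 1; }
    # The execute bit is needed for cron to run the file directly
    [ -x "$script" ] || { echo "not executable: $script" >&2; return 1; }
    echo "ok: $script"
}

# Example call:
# check_script /root/backup-etc.sh
```

Note that bash -n only checks syntax. Reading the file, for example with less, is still the only way to confirm what it actually does.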

Benefits and Modifications

The backup-etc.sh script is flexible and can be easily modified to suit different systems. By default, it writes archives to the /var/lib/vz/dump/ directory, which is a standard backup storage location in Proxmox environments. This simplifies backup management and makes it easy to integrate the script with existing backup solutions.
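One way to make such modifications easier is to turn the fixed paths and the retention period into parameters. The following is a sketch under that assumption. The function name backup_directory and its three parameters are my own additions, while the archive naming and the tar and find calls follow the original script.

```shell
#!/bin/bash

# Archive source_dir into backup_dir, then prune old archives.
# Arguments (all illustrative): source directory, destination directory,
# retention in days (defaults to 100, as in the original script).
backup_directory() {
    local source_dir=$1 backup_dir=$2 retention_days=${3:-100}
    local prefix stamp
    prefix="vz-etc-$(hostname)"
    stamp=$(date +%Y_%m_%d-%H_%M_%S)

    # Stop here if the archive cannot be written, so nothing is pruned
    tar --use-compress-program zstd \
        -cf "$backup_dir/$prefix-$stamp.tar.zst" "$source_dir" || return 1

    # Only files that match this naming scheme are considered for removal
    find "$backup_dir" -name "$prefix-*.tar.zst" -type f \
        -mtime +"$retention_days" -delete
}

# Example call that mirrors the original behaviour:
# backup_directory /etc/ /var/lib/vz/dump 100
```

Returning early when tar fails means that a broken backup run does not also delete older, still valid archives.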

Keeping backups for 100 days balances the availability of older copies against disk usage. Old copies are deleted automatically, which reduces the risk of filling the disk and lowers data storage costs.
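Before changing the retention period, it can help to preview which archives the cleanup would remove. The sketch below lists them without deleting anything. The function name preview_cleanup is hypothetical, and the name pattern assumes archives are named as in the tar command, vz-etc-<hostname>-<date>.tar.zst. With GNU find, -mtime +100 matches files whose age, rounded down to whole days, is greater than 100, so an archive qualifies once it is at least 101 days old.

```shell
#!/bin/bash

# List the archives that a cleanup with the given retention would delete,
# without deleting them. Retention defaults to 100 days.
# (preview_cleanup is a hypothetical helper name.)
preview_cleanup() {
    local backup_dir=$1 retention_days=${2:-100}
    find "$backup_dir" -name 'vz-etc-*.tar.zst' -type f \
        -mtime +"$retention_days" -print
}

# Example call:
# preview_cleanup /var/lib/vz/dump 100
```

Replacing -print with -delete turns the preview into the actual cleanup.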

Summary

Automating backups using a bash script and Crontab is an effective method to secure critical system data. The backup-etc.sh script provides simplicity, flexibility, and efficiency, making it an excellent solution for Proxmox system administrators. I encourage you to adapt and modify this script according to your own needs to provide even better protection for your IT environment.