Introduction

Proxmox VE 9.0 (based on Debian 13 “Trixie”) has been released, and it brings updated packages, a new kernel, and improved stability. This guide will walk you through the in-place upgrade from Proxmox VE 8.4.x (Debian 12 “Bookworm”) to Proxmox VE 9.0.

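The process is automated with a ready-made upgrade script that:

- Checks your current version

- Runs the pve8to9 pre-upgrade check

- Backs up your current APT sources

- Disables the Enterprise repository and keeps a single pve-no-subscription entry

- Updates repositories from Bookworm to Trixie

- Performs a full, non-interactive dist-upgrade

- Logs the system state before the upgrade and again on the first boot afterwards

- Reboots the host when it finishes (unless NO_REBOOT=1 is set)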
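If you want to see the pre-upgrade verdict before the script touches anything, you can run the checker on its own; it is a checklist tool and does not change the system. The sketch below is a minimal example, assuming the summary contains a line of the form FAILURES: N, which is the same pattern the script greps for before deciding to abort. The helper name pve8to9_failures is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: run the pve8to9 checker and extract its FAILURES count.
# Assumption: the summary contains a line like "FAILURES: 0" (the pattern the
# upgrade script itself greps for). pve8to9_failures is a hypothetical helper.

# Read checker output on stdin; print the last FAILURES count found (or nothing).
pve8to9_failures() {
  sed -nE 's/^[[:space:]]*FAILURES:[[:space:]]*([0-9]+).*/\1/p' | tail -n 1
}

# Only run the checker if it is installed on this host.
if command -v pve8to9 >/dev/null 2>&1; then
  failures="$(pve8to9 --full 2>&1 | pve8to9_failures)"
  echo "pve8to9 FAILURES: ${failures:-not found in output}"
fi
```

A non-zero count means the script would stop at its precheck step, so fix the reported problems first.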

Download and run the upgrade script

You can download the ready-made upgrade script directly from our server, make it executable, and start it in the background with nohup, so it keeps running even if your SSH session drops:

```bash
wget https://soban.pl/bash/upgrade-pve8-to-9.sh -O /root/upgrade-pve8-to-9.sh
chmod +x /root/upgrade-pve8-to-9.sh
LOGF="/root/pve8to9.$(date +%F-%H%M%S).nohup.log"; nohup env NO_REBOOT=0 SKIP_PRECHECK=0 /root/upgrade-pve8-to-9.sh > "$LOGF" 2>&1 & echo $! >/run/pve8to9.pid
tail -f "$LOGF"
```

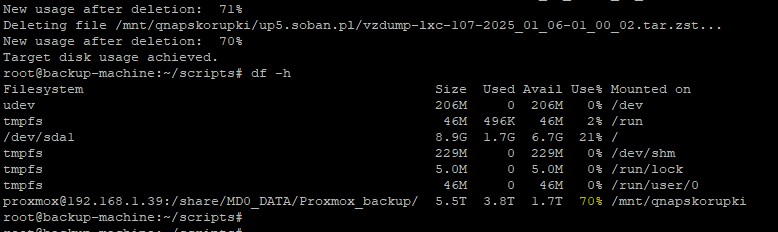
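Because the launch command records the background PID in /run/pve8to9.pid (and the script writes its own PID to the same file), you can check from a fresh SSH session whether the upgrade is still running. This is a minimal sketch; pid_alive is a hypothetical helper name. Run it as root, since kill -0 can report a permission error for another user's process, and keep in mind that /run is cleared on reboot.

```bash
#!/usr/bin/env bash
# Sketch: is the process whose PID is stored in a pid file still alive?
# pid_alive is a hypothetical helper; /run/pve8to9.pid is the file written
# by the launch command and by the upgrade script.
pid_alive() {
  local pidfile="${1:-/run/pve8to9.pid}" pid
  [[ -r "$pidfile" ]] || return 1          # no pid file (e.g. after reboot)
  pid="$(<"$pidfile")"
  [[ "$pid" =~ ^[0-9]+$ ]] || return 1     # ignore non-numeric content
  kill -0 "$pid" 2>/dev/null               # signal 0: existence check only
}

if pid_alive /run/pve8to9.pid; then
  echo "Upgrade script is still running (PID $(</run/pve8to9.pid))."
else
  echo "No running upgrade script found."
fi
```

After the automatic reboot the PID file is gone, so "not running" is the expected answer at that point.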

If you lose the SSH connection (which can happen during a Proxmox upgrade), reconnect and follow the log with:

```bash
tail -f /root/pve-upgrade-latest.log
```

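Be patient and wait for the script to finish; this can take a while. With NO_REBOOT=0, as in the command above, the host reboots automatically once the upgrade phase is complete.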
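After reconnecting, the log tells you how the run ended: the script prints "Upgrade phase completed" just before the reboot step, and every fatal check goes through die(), which prefixes the message with "ERROR: ". The sketch below classifies a log by these two markers; upgrade_log_status is a hypothetical name. A run that was stopped by set -e (for example a failing apt-get under pipefail) leaves neither marker, so it is reported in the same bucket as a run that is still going.

```bash
#!/usr/bin/env bash
# Sketch: classify an upgrade log by the markers the script writes.
# "Upgrade phase completed" is logged by msg() before the reboot step;
# "ERROR: " is the prefix used by die(). upgrade_log_status is hypothetical.
upgrade_log_status() {
  local log="${1:-/root/pve-upgrade-latest.log}"
  if [[ ! -r "$log" ]]; then
    echo "missing"
  elif grep -q '^ERROR: ' "$log"; then
    echo "failed"
  elif grep -q '^Upgrade phase completed' "$log"; then
    echo "completed"
  else
    echo "in-progress-or-aborted"   # no marker yet, or the script exited under set -e
  fi
}

upgrade_log_status /root/pve-upgrade-latest.log
```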

Full script source

```bash
#!/bin/bash
# Proxmox VE 8 -> 9 in-place upgrade (Debian 12 "bookworm" -> Debian 13 "trixie")
# Hardened: nuke/lock Enterprise repo, move backups OUT of APT dir, ensure single no-sub,
# robust pve8to9 detection/installation, switch to trixie, non-interactive upgrade, post-boot check.

set -euo pipefail

LOG="/root/pve-upgrade-$(date +%Y%m%d-%H%M%S).log"
SINK="/etc/apt/disabled-sources"   # OUTSIDE of APT scan path
APT_BACKUP_DIR="/root/apt-sources-backup-$(date +%Y%m%d-%H%M%S)"
NO_REBOOT="${NO_REBOOT:-0}"
FORCE_UPGRADE="${FORCE_UPGRADE:-0}"
SKIP_PRECHECK="${SKIP_PRECHECK:-0}"

# Ensure sane PATH for non-interactive shells
export PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:${PATH:-}"

export DEBIAN_FRONTEND=noninteractive
export APT_LISTCHANGES_FRONTEND=none
export UCF_FORCE_CONFFOLD=1
shopt -s nullglob

msg() { echo "$*" | tee -a "$LOG"; }
die() { echo "ERROR: $*" | tee -a "$LOG"; exit 1; }
ts()  { date +%F-%H%M%S; }

sink() { # move file out of sources.list.d safely
  local f="$1" base
  [[ -e "$f" ]] || return 0
  base="$(basename "$f")"
  mkdir -p "$SINK"
  mv -f "$f" "$SINK/$base.bak.$(ts)"
}

divert_add() {
  local p="$1"
  if ! dpkg-divert --list | grep -Fq "diversion of $p"; then
    dpkg-divert --quiet --local --rename --add "$p" || true
  fi
  rm -f "$p" || true
}

cleanup_nonlist_sources() {
  mkdir -p "$SINK"
  find /etc/apt/sources.list.d -maxdepth 1 -type f \
    ! -name '*.list' ! -name '*.sources' \
    -exec mv -f {} "$SINK"/ \; || true
}

# STRICT verify: only fail on ACTIVE enterprise refs
verify_no_enterprise() {
  local bad=0
  # 1) Active lines in *.list (not commented)
  if grep -RIsn '^[[:space:]]*deb[[:space:]].*enterprise\.proxmox\.com' /etc/apt/sources.list /etc/apt/sources.list.d 2>/dev/null; then
    bad=1
  fi
  # 2) Deb822 .sources that mention enterprise AND are effectively enabled
  while IFS= read -r -d '' f; do
    if grep -qi 'enterprise\.proxmox\.com' "$f"; then
      # treat missing Enabled as enabled, only "Enabled: no" is safe
      if ! grep -qi '^[[:space:]]*Enabled:[[:space:]]*no[[:space:]]*$' "$f"; then
        echo "[VERIFY] Enabled enterprise in: $f" | tee -a "$LOG"
        bad=1
      fi
    fi
  done < <(find /etc/apt/sources.list.d -maxdepth 1 -type f -name '*.sources' -print0 2>/dev/null)
  (( bad == 0 )) || die "Enterprise repo still detected. Clean failed."
}

# Disabled deb822 stub WITHOUT enterprise domain (so greps never trip)
write_disabled_enterprise_sources() {
  cat > /etc/apt/sources.list.d/pve-enterprise.sources <<'EOF'
Types: deb
URIs: https://example.invalid/proxmox
# placeholder to avoid enterprise domain
Suites: trixie
Components: pve-enterprise
Enabled: no
EOF
  chmod 0644 /etc/apt/sources.list.d/pve-enterprise.sources
}

# --------- FIXED: robust locator for pve8to9 + debug ----------
locate_pve8to9() {
  hash -r 2>/dev/null || true
  local tool=""
  tool="$(type -P pve8to9 2>/dev/null || true)"
  [[ -z "$tool" ]] && tool="$(command -v pve8to9 2>/dev/null || true)"
  [[ -z "$tool" && -x /usr/bin/pve8to9 ]] && tool="/usr/bin/pve8to9"
  [[ -z "$tool" && -x /usr/sbin/pve8to9 ]] && tool="/usr/sbin/pve8to9"
  [[ -z "$tool" ]] && tool="$(/usr/bin/which pve8to9 2>/dev/null || true)"
  if [[ -z "$tool" ]] && command -v dpkg >/dev/null 2>&1; then
    local cand
    cand="$(dpkg -L pve-manager 2>/dev/null | grep -E '/pve8to9$' || true)"
    [[ -n "$cand" && -x "$cand" ]] && tool="$cand"
  fi
  echo "$tool"
}

run_pve8to9_precheck() {
  [[ "$SKIP_PRECHECK" == "1" ]] && { msg "SKIP_PRECHECK=1 set — skipping pve8to9."; return 0; }
  msg "Running pve8to9 --full precheck..."
  msg "PATH=$PATH"
  type -a pve8to9 2>&1 | sed 's/^/[type] /' | tee -a "$LOG" || true
  [[ -e /usr/bin/pve8to9 ]] && ls -l /usr/bin/pve8to9 | sed 's/^/[ls] /' | tee -a "$LOG" || true

  local tool; tool="$(locate_pve8to9)"
  if [[ -z "$tool" ]]; then
    msg "pve8to9 not found — attempting to ensure 'pve-manager' is installed..."
    apt-get update -y >> "$LOG" 2>&1 || true
    apt-get install -y pve-manager >> "$LOG" 2>&1 || true
    cleanup_nonlist_sources; verify_no_enterprise
    hash -r 2>/dev/null || true
    tool="$(locate_pve8to9)"
  fi

  if [[ -z "$tool" ]]; then
    msg "pve8to9 still not available on this system. Continuing without precheck."
    return 0
  fi

  msg "pve8to9 found at: $tool"
  local tmp rc
  tmp="$(mktemp)"
  set +e
  "$tool" --full | tee -a "$LOG" | tee "$tmp" >/dev/null
  rc=${PIPESTATUS[0]}
  set -e
  if grep -qE 'FAILURES:\s*[1-9]+' "$tmp"; then
    rm -f "$tmp"
    die "pve8to9 reported FAILURES. Check log above."
  fi
  rm -f "$tmp"
  msg "pve8to9 executed (rc=$rc). No FAILURES."
}
# ---------------------------------------------------------------

# ----- header / live log hint -----
echo "== Proxmox VE 8 -> 9 upgrade started: $(date) ==" | tee -a "$LOG"
echo "$$" > /run/pve8to9.pid
ln -sfn "$LOG" /root/pve-upgrade-latest.log
echo "Live log: tail -f /root/pve-upgrade-latest.log" | tee -a "$LOG"
echo "If started via nohup, also watch its file (example: /root/pve8to9.<ts>.nohup.log)" | tee -a "$LOG"

# 0) PRE-NUKE ENTERPRISE + move backups OUT of APT dir
msg "[PRE-NUKE] Backup APT lists and clean directory..."
mkdir -p "$APT_BACKUP_DIR"
cp -a /etc/apt/sources.list "$APT_BACKUP_DIR"/ 2>/dev/null || true
cp -a /etc/apt/sources.list.d "$APT_BACKUP_DIR"/ 2>/dev/null || true
cleanup_nonlist_sources

# Remove any *.sources referencing Enterprise
for s in /etc/apt/sources.list.d/*.sources; do
  [[ -f "$s" ]] || continue
  grep -qi 'enterprise\.proxmox\.com' "$s" && { msg " -> removing: $s"; sink "$s"; }
done

# Remove typical filenames
sink /etc/apt/sources.list.d/pve-enterprise.sources
sink /etc/apt/sources.list.d/pve-enterprise.list

# For *.list with Enterprise: comment lines or remove if Enterprise-only
for l in /etc/apt/sources.list.d/*.list; do
  [[ -f "$l" ]] || continue
  if grep -qi 'enterprise\.proxmox\.com' "$l"; then
    if awk 'BEGIN{IGNORECASE=1} /^[[:space:]]*deb/ && $0 !~ /enterprise\.proxmox\.com/ {ok=1} END{exit ok?0:1}' "$l"; then
      msg " -> commenting Enterprise lines in: $l"
      sed -ri 's|^\s*deb\s+https?://enterprise\.proxmox\.com|#DISABLED-BY-SCRIPT &|I' "$l"
    else
      msg " -> removing Enterprise-only list: $l"
      sink "$l"
    fi
  fi
done

# Comment Enterprise in main sources.list
[[ -f /etc/apt/sources.list ]] && sed -ri 's|^\s*deb\s+https?://enterprise\.proxmox\.com|#DISABLED-BY-SCRIPT &|I' /etc/apt/sources.list || true

# Diversions + disabled deb822 file (with example.invalid), then re-clean any .distrib/.bak
divert_add /etc/apt/sources.list.d/pve-enterprise.list
divert_add /etc/apt/sources.list.d/pve-enterprise.sources
write_disabled_enterprise_sources
cleanup_nonlist_sources
verify_no_enterprise

# 1) Sanity checks
if [[ $EUID -ne 0 ]]; then die "Run as root."; fi
if ! command -v pveversion >/dev/null 2>&1; then die "Not a Proxmox VE host."; fi
CUR_VER_RAW="$(pveversion || true)"; msg "Current pveversion: $CUR_VER_RAW"
if ! echo "$CUR_VER_RAW" | grep -qE 'pve-manager/8\.'; then
  msg "Expected Proxmox 8.x. Detected: $CUR_VER_RAW"
  [[ "$FORCE_UPGRADE" == "1" ]] || die "Set FORCE_UPGRADE=1 to override."
fi

# BEFORE snapshot
{
  echo; echo "===== BEFORE UPGRADE ====="; date
  echo "# uname -a"; uname -a || true
  echo; echo "# pveversion"; pveversion || true
  echo; echo "# qm list"; qm list || true
  echo; echo "# pct list"; pct list || true
} >> "$LOG" 2>&1

# 2) pve8to9 precheck (robust)
run_pve8to9_precheck

# 3) Keyring + initial apt refresh
apt-get update -y >> "$LOG" 2>&1 || true
apt-get install -y proxmox-archive-keyring >> "$LOG" 2>&1 || true
verify_no_enterprise
cleanup_nonlist_sources

# 4) Switch bookworm -> trixie
msg "Switching Debian repositories: bookworm -> trixie ..."
sed -i 's/bookworm/trixie/g' /etc/apt/sources.list || true
find /etc/apt/sources.list.d -maxdepth 1 -type f -exec sed -i 's/bookworm/trixie/g' {} \; || true

# 5) Ensure single no-subscription entry
sed -ri '/download\.proxmox\.com\/debian\/pve.*pve-no-subscription/s/^/#DUPLICATE-BY-SCRIPT /' /etc/apt/sources.list || true
for l in /etc/apt/sources.list.d/*.list; do
  [[ -f "$l" ]] || continue
  [[ "$l" == "/etc/apt/sources.list.d/pve-no-subscription.list" ]] && continue
  sed -ri '/download\.proxmox\.com\/debian\/pve.*pve-no-subscription/s/^/#DUPLICATE-BY-SCRIPT /' "$l" || true
done
cat > /etc/apt/sources.list.d/pve-no-subscription.list <<'EOF'
deb http://download.proxmox.com/debian/pve trixie pve-no-subscription
EOF
chmod 0644 /etc/apt/sources.list.d/pve-no-subscription.list
verify_no_enterprise
cleanup_nonlist_sources

# 6) Full non-interactive upgrade
msg "Running apt-get update + non-interactive upgrade/dist-upgrade..."
TMPU="$(mktemp)"
apt-get update 2>&1 | tee -a "$LOG" | tee "$TMPU" >/dev/null
if grep -q 'enterprise\.proxmox\.com' "$TMPU"; then rm -f "$TMPU"; die "APT tried to reach Enterprise during update."; fi
rm -f "$TMPU"
apt-get -o Dpkg::Options::="--force-confdef" -o Dpkg::Options::="--force-confold" upgrade -y | tee -a "$LOG"
apt-get -o Dpkg::Options::="--force-confdef" -o Dpkg::Options::="--force-confold" dist-upgrade -y | tee -a "$LOG"

# Second cleanup pass
cleanup_nonlist_sources
apt-get autoremove -y | tee -a "$LOG" || true
apt-get update -y >> "$LOG" 2>&1 || true

# 7) One-shot post-boot verification
POST_SH="/root/pve-postcheck.sh"
POST_SVC="/etc/systemd/system/pve-upgrade-postcheck.service"

cat > "$POST_SH" <<'EOS'
#!/bin/bash
set -euo pipefail
LOG_FILE="/root/pve-upgrade-post-$(date +%Y%m%d-%H%M%S).log"
{
  echo "===== AFTER UPGRADE (first boot) ====="; date
  echo "# uname -a"; uname -a || true
  echo; echo "# pveversion"; pveversion || true
  echo; echo "# apt policy (trixie check)"; apt-cache policy | sed -n '1,120p' || true
} >> "$LOG_FILE" 2>&1
systemctl disable --now pve-upgrade-postcheck.service >/dev/null 2>&1 || true
rm -f /root/pve-postcheck.sh
EOS
chmod +x "$POST_SH"

cat > "$POST_SVC" <<'EOS'
[Unit]
Description=Proxmox upgrade post-check (one-shot)
After=multi-user.target pvedaemon.service pve-cluster.service
Wants=network-online.target

[Service]
Type=oneshot
ExecStart=/bin/bash /root/pve-postcheck.sh

[Install]
WantedBy=multi-user.target
EOS

systemctl daemon-reload
systemctl enable pve-upgrade-postcheck.service >> "$LOG" 2>&1 || true

# 8) Pre-reboot snapshot
{
  echo; echo "===== PRE-REBOOT SNAPSHOT ====="; date
  echo "# Installed pve kernels:"; dpkg -l | grep -E '^ii\s+pve-kernel' | sort -V || true
} >> "$LOG" 2>&1

msg "Upgrade phase completed. Log: $LOG"
if [[ "$NO_REBOOT" == "1" ]]; then
  msg "NO_REBOOT=1 set — skipping reboot. Please reboot manually."
else
  msg "Rebooting now to complete the upgrade..."
  sleep 3
  reboot
fi
```

The listing above is the full content of the script, shown for reference. It is recommended to download the latest version from the URL in the wget command above to make sure you have the most up-to-date fixes.
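Step 4 of the script rewrites every bookworm reference with sed. To double-check afterwards that no active APT source still points at Bookworm, a read-only sketch like the following can help. It ignores anything after a # on a line, which also covers the #DISABLED-BY-SCRIPT and #DUPLICATE-BY-SCRIPT prefixes the script adds; stale_suite_refs is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: print APT source files that still mention a suite in an active
# (non-commented) part of a line. Read-only; stale_suite_refs is hypothetical.
# Returns 0 if at least one file matches, 1 otherwise.
stale_suite_refs() {
  local suite="${1:-bookworm}" dir="${2:-/etc/apt}" f
  local files=()
  for f in "$dir/sources.list" "$dir"/sources.list.d/*.list "$dir"/sources.list.d/*.sources; do
    [[ -f "$f" ]] && files+=("$f")   # skips unmatched glob patterns too
  done
  (( ${#files[@]} > 0 )) || return 1
  # "^[^#]*suite": the suite must appear before the first '#' on the line
  grep -l -- "^[^#]*${suite}" "${files[@]}"
}

if stale_suite_refs bookworm /etc/apt; then
  echo "The files listed above still reference bookworm in an active line."
else
  echo "No active bookworm references found in APT sources."
fi
```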

Post-upgrade verification

```bash
pveversion
```

The output should look similar to this:

```text
pve-manager/9.x.x/xxxxxxxx (running kernel: 6.x.x-x-pve)
```
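To check the version programmatically rather than by eye, you can extract the major number from that line. This sketch mirrors the script's own pve-manager/8\. test, only for 9; pve_major is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: extract the pve-manager major version from a pveversion line such as
# "pve-manager/9.x.x/xxxxxxxx (running kernel: 6.x.x-x-pve)".
# pve_major is a hypothetical helper; prints nothing if the line does not match.
pve_major() {
  sed -nE 's|.*pve-manager/([0-9]+)\..*|\1|p' <<<"$1" | head -n 1
}

if command -v pveversion >/dev/null 2>&1; then
  major="$(pve_major "$(pveversion)")"
  if [[ "$major" == "9" ]]; then
    echo "OK: pve-manager major version $major"
  else
    echo "Unexpected pve-manager major version: ${major:-unknown}"
  fi
fi
```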

Check the running kernel version:

```bash
uname -a
```
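uname -a prints the whole kernel string. If you only want to confirm that the host came back on a Proxmox kernel (the -pve suffix in the expected output above) and see which series it is, a small sketch like this is enough. It does not decide which series is correct for 9.0, it only reports it; pve_kernel_series is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: if a kernel release string looks like a Proxmox kernel
# ("<major>.<minor>.<patch>-<n>-pve"), print its major.minor series.
# pve_kernel_series is a hypothetical helper name.
pve_kernel_series() {
  local rel="$1" re='^([0-9]+)\.([0-9]+)\..*-pve$'
  [[ "$rel" =~ $re ]] || return 1
  echo "${BASH_REMATCH[1]}.${BASH_REMATCH[2]}"
}

if series="$(pve_kernel_series "$(uname -r)")"; then
  echo "Running Proxmox kernel series $series ($(uname -r))"
else
  echo "Running kernel $(uname -r) does not look like a -pve kernel"
fi
```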

Review the upgrade log to confirm no errors occurred:

```bash
less /root/pve-upgrade-*.log
```
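To avoid paging through the whole log, you can pull out only error-looking lines. The patterns below are an assumption about what is worth flagging: "ERROR: " (the script's die() prefix), "E: " (APT error lines) and "dpkg: error". Note that the script pipes only the stdout of apt-get upgrade and dist-upgrade into its own log, so APT errors from those steps end up in the nohup log created by the launch command; run the helper on both files. upgrade_log_errors is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: print error-looking lines (with line numbers) from one log file.
# Flagged prefixes (an assumption about what matters): "ERROR: " from the
# script's die(), "E: " from APT and "dpkg: error" from dpkg.
# upgrade_log_errors is a hypothetical helper name.
# Exit status: 0 = lines found, 1 = none found, 2 = file not readable.
upgrade_log_errors() {
  local log="${1:-/root/pve-upgrade-latest.log}"
  [[ -r "$log" ]] || { echo "cannot read $log" >&2; return 2; }
  grep -nE '^(ERROR: |E: |dpkg: error)' "$log"
}

rc=0
upgrade_log_errors /root/pve-upgrade-latest.log || rc=$?
case "$rc" in
  0) echo "Error lines found; review them above." ;;
  1) echo "No error lines matched." ;;
  *) echo "Log not readable." ;;
esac
```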

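The script also installs a one-shot systemd service (pve-upgrade-postcheck.service) that runs on the first boot after the upgrade, writes its own log, and then disables itself. You can view its results with: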

```bash
less /root/pve-upgrade-post-*.log
```
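The post-check log names carry a sortable YYYYmmdd-HHMMSS timestamp, so the newest one is simply the last match of the glob. Below is a minimal sketch that finds it and checks that it recorded a 9.x pve-manager line; latest_postcheck_log is a hypothetical helper name, and it requires bash 4.3 or newer for the negative array index.

```bash
#!/usr/bin/env bash
# Sketch: pick the newest post-check log (file names embed a sortable
# YYYYmmdd-HHMMSS timestamp) and check that it recorded pve-manager 9.x.
# latest_postcheck_log is a hypothetical helper name.
latest_postcheck_log() {
  local dir="${1:-/root}" f
  local logs=()
  for f in "$dir"/pve-upgrade-post-*.log; do
    [[ -f "$f" ]] && logs+=("$f")   # skips the literal pattern if nothing matched
  done
  (( ${#logs[@]} > 0 )) || return 1
  printf '%s\n' "${logs[-1]}"       # glob results are sorted, so the last is newest
}

if log="$(latest_postcheck_log /root)"; then
  if grep -q 'pve-manager/9\.' "$log"; then
    echo "$log: pve-manager 9.x recorded"
  else
    echo "$log: no pve-manager 9.x line found"
  fi
else
  echo "No post-check log found (has the host rebooted since the upgrade?)"
fi
```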